DevOps On AWS

More and more workloads are moving to the cloud. Depending on your needs, some of your workloads and processes may need to be partially or fully migrated. However, not every use case requires AWS, and your existing setup might already meet your current requirements. So how can you tell when it’s time to adopt the cloud, and which tools should you consider?

Before making any financial commitments, identify the problem you’re trying to solve, consider all of its aspects, and then develop a solution. As always, choose the best tool for the job.

Today’s challenge is how to use automation techniques and tools to reduce deployment times and make the software development cycle more efficient.

TL;DR: Jump to the Architectural Deep Dive section of this article to get the goods.

A quick peek at the tech stack:

AWS Services:

- AWS CodePipeline

- AWS CodeBuild

- AWS CodeDeploy

- Amazon CloudFront

- Amazon S3

- Amazon EC2

- Amazon Route 53

- AWS Certificate Manager

Infrastructure As Code:

- Terraform

Back-end Development:

- Nginx

- Docker

- Node.js and Express.js for the web server

- Apollo GraphQL Server for the GraphQL API

- MongoDB for storing user, room ID, and geolocation data (more on this later)

- Socket.io

Front-end Development:

- React library

- React Leaflet (React components for Leaflet maps)

- GraphQL Client

- Material UI React component library

If you’re interested in learning more, or need consulting services on managing and scaling applications and infrastructure on AWS, please don’t hesitate to get in touch.

DevOps and CI/CD on AWS

Let’s imagine your company runs one or more legacy production environments on-premises or with a different web hosting provider. The technology hasn’t changed, but your CTO has decided it’s time to evolve and adopt a modern tech stack. A good starting point for this transition is getting acquainted with DevOps and CI/CD practices.

Note: DevOps is usually implemented in larger organizations with larger teams. If you’re running a website on GoDaddy, DevOps and CI/CD may not be for you at the moment; the operational overhead could break the bank.

So, what are DevOps and CI/CD, and why do we need them?

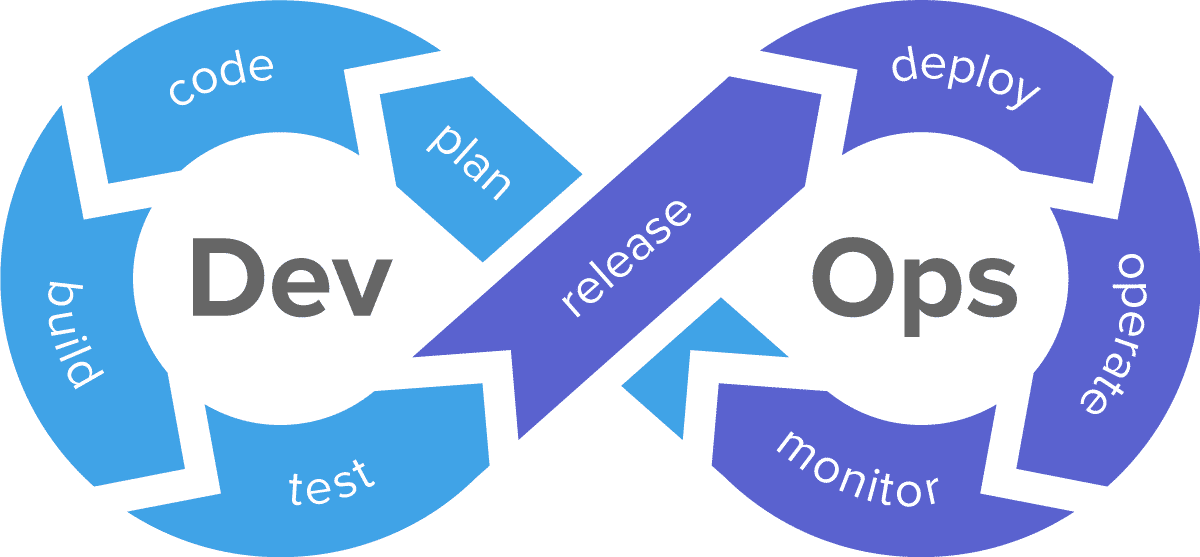

DevOps is a holistic business practice that combines people, technologies, cultural practices, and processes to bring previously siloed teams together to deliver better software faster. Successful DevOps implementations are viewed as an organizational change when it comes to software development. Learn about DevOps and how to optimize software delivery with DevOps throughout the software development lifecycle (SDLC). — github.com

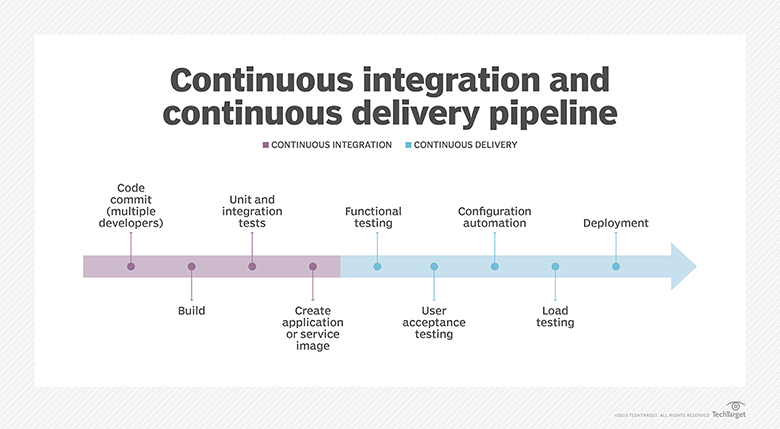

Automation is a core principle for achieving DevOps success and CI/CD is a critical component. CI/CD comprises continuous integration and continuous delivery or continuous deployment. Put together, they form a “CI/CD pipeline”—a series of automated workflows that help DevOps teams cut down on manual tasks. — github.com
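To make “a series of automated workflows” concrete, here is a minimal Python sketch that is not tied to any AWS service: each stage is a function that receives the output of the previous one, and the run stops at the first failing stage so a broken build never reaches deployment. The stage functions and the `run_pipeline` helper are hypothetical stand-ins, not CodePipeline APIs.

```python
from typing import Callable, List, Tuple

# A stage is a (name, function) pair. Each function receives the artifact
# produced so far and returns an updated one; raising an exception fails it.
Stage = Tuple[str, Callable[[dict], dict]]


def run_pipeline(stages: List[Stage], artifact: dict) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure (hypothetical helper)."""
    log: List[str] = []
    for name, step in stages:
        try:
            artifact = step(artifact)
        except Exception as exc:  # any error fails the stage and halts the run
            log.append(f"{name}: FAILED ({exc})")
            return False, log
        log.append(f"{name}: ok")
    return True, log


# Hypothetical stand-ins for real Source, Build and Deploy actions.
def source(artifact: dict) -> dict:
    return {**artifact, "commit": "abc123"}  # pretend we fetched a commit


def build(artifact: dict) -> dict:
    if "commit" not in artifact:
        raise ValueError("no commit to build")
    return {**artifact, "build_dir": f"build-{artifact['commit']}"}


def deploy(artifact: dict) -> dict:
    return {**artifact, "deployed": True}


ok, log = run_pipeline([("Source", source), ("Build", build), ("Deploy", deploy)], {})
print(ok, log)  # True ['Source: ok', 'Build: ok', 'Deploy: ok']
```

If the Source stage is left out, Build fails and Deploy never runs, which is exactly the property that keeps broken code away from production.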

Deployment

Now that the CTO has signed off on moving to the cloud and the team has a good handle on DevOps and CI/CD, we can move forward with modern tooling.

Before my AWS journey, the first iteration of this project was architected using a monolithic approach. After using AWS for three years, I identified areas for improvement and ways to distribute the computing workloads using specific AWS services. Let’s dive deeper and explore some architectural enhancements.

Note: An argument can be made for continuing to use a monolithic architecture alongside microservices. Again, this depends on your current workload.

Architectural Deep Dive

Docker development

In my Activity Tracker web application, I create two containers: one for the MongoDB database and Node.js server, and another for the Socket.io server. You also need to decide how far to decouple your services: too much decoupling can create an overly complicated architecture that is prone to bugs and over-provisioned AWS resources.

Because teams are spread out and development environments vary across different operating systems, containerizing your applications with Docker ensures consistency throughout the software development life cycle.

Tips:

- Consider creating custom base images.

- Keep your production images lean (cut the fat).

- Tag your images and keep track of versions, for example, Production, Staging, Development, and so on.

- Make your containers stateless and persist critical data outside them, in a database or other data store, so it survives an outage.

- Follow security best practices by keeping secrets in a managed secret store, such as AWS Secrets Manager, rather than baking them into your images.
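The tagging tip is easy to enforce in a build script. Here is a minimal Python sketch that assumes a version-plus-environment scheme such as `1.4.2-staging`; that scheme, the environment names, and `make_image_tag` are illustrative assumptions, while the allowed characters and length limit follow Docker's tag format.

```python
import re

# Docker's tag rule: letters, digits, '_', '.', '-'; the first character
# may not be '.' or '-'; at most 128 characters in total.
DOCKER_TAG = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")
SEMVER = re.compile(r"\d+\.\d+\.\d+")

# Hypothetical environment names, mirroring the tip above.
ENVIRONMENTS = {"prod", "staging", "dev"}


def make_image_tag(version: str, environment: str) -> str:
    """Build a tag such as '1.4.2-staging' (assumed naming scheme)."""
    if not SEMVER.fullmatch(version):
        raise ValueError(f"version must look like MAJOR.MINOR.PATCH: {version!r}")
    if environment not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {environment!r}")
    tag = f"{version}-{environment}"
    if not DOCKER_TAG.fullmatch(tag):
        raise ValueError(f"not a valid Docker tag: {tag!r}")
    return tag


print(make_image_tag("1.4.2", "staging"))  # 1.4.2-staging
```

Deploying by an explicit tag like this, rather than a moving tag such as latest, makes it obvious which build is running in each environment.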

After building an image for each back-end service, you can store the images in Amazon Elastic Container Registry (ECR). Then, on your EC2 instances, use the AWS CLI to authenticate Docker with ECR and pull those images.
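Pulling from ECR on an instance comes down to two steps: log Docker in to the account's private registry, then pull by the full image URI. The Python sketch below builds those commands from the registry host format `<account-id>.dkr.ecr.<region>.amazonaws.com`. The account ID 123456789012 is the usual AWS documentation placeholder, and the repository name and helper functions are hypothetical.

```python
import re
from typing import List

ACCOUNT_ID = re.compile(r"\d{12}")
# ECR repository names: lowercase segments separated by '/', with '.', '_'
# or '-' allowed between alphanumeric runs.
REPOSITORY = re.compile(r"(?:[a-z0-9]+(?:[._-][a-z0-9]+)*/)*[a-z0-9]+(?:[._-][a-z0-9]+)*")


def ecr_registry(account_id: str, region: str) -> str:
    """Private ECR registry host for one account and region."""
    if not ACCOUNT_ID.fullmatch(account_id):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    if not REPOSITORY.fullmatch(repository):
        raise ValueError(f"invalid repository name: {repository!r}")
    return f"{ecr_registry(account_id, region)}/{repository}:{tag}"


def pull_commands(account_id: str, region: str, repository: str, tag: str) -> List[str]:
    """Shell commands an EC2 instance could run to pull one image (hypothetical helper)."""
    registry = ecr_registry(account_id, region)
    image = ecr_image_uri(account_id, region, repository, tag)
    return [
        # Uses the caller's AWS credentials (on EC2, usually the instance
        # role) to fetch a registry password and pipe it into docker login.
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        f"docker pull {image}",
    ]


for cmd in pull_commands("123456789012", "us-east-1", "activity-tracker/api", "1.4.2-prod"):
    print(cmd)
```

The instance role needs permission to fetch the token and the image layers, for example ecr:GetAuthorizationToken, ecr:BatchGetImage, and ecr:GetDownloadUrlForLayer.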

GitHub or GitLab

It’s 2024. Choose one.

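At the time of writing, this project uses GitHub as the pipeline source. The list of source providers that AWS CodePipeline supports changes over time, so check the CodePipeline documentation before you choose.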

Tip:

- Include a buildspec.yml file in the root of your project. More on this later.

Amazon CloudFront

I used Amazon CloudFront to serve the React front end as a static website stored in an S3 bucket. A single-page application (SPA) is a great fit for this setup. Building a production version of the site is covered later.

- No servers to manage

- Scales globally

- Integrates with many AWS services

- Highly cost-effective
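One detail worth knowing when serving a React SPA this way: client-side routes such as /rooms/42 have no matching object in the bucket. A common setup adds CloudFront custom error responses that return /index.html with status 200 and let React's router take over. The Python sketch below models that behavior. The bucket contents and function names are hypothetical, and the origin is simplified to a private S3 bucket that CloudFront reads on the viewer's behalf.

```python
from typing import Dict, Set, Tuple

# Custom error responses: origin status -> (page to return, status sent to viewer).
# Mapping 403 as well as 404 matters for private buckets: S3 answers 403 for a
# missing key unless the reader is allowed to list the bucket.
SPA_ERROR_RESPONSES: Dict[int, Tuple[str, int]] = {
    403: ("/index.html", 200),
    404: ("/index.html", 200),
}


def origin_status(key: str, objects: Set[str], can_list_bucket: bool) -> int:
    """Simplified S3 origin: 200 if the key exists, else 404 or 403."""
    if key in objects:
        return 200
    return 404 if can_list_bucket else 403


def serve(path: str, objects: Set[str], can_list_bucket: bool = False,
          default_root_object: str = "index.html") -> Tuple[str, int]:
    """Return (object key served, HTTP status) for a viewer request path."""
    path = path.split("?", 1)[0]  # the query string is not part of the key
    key = path.lstrip("/") or default_root_object  # "/" maps to the root object
    status = origin_status(key, objects, can_list_bucket)
    if status == 200:
        return key, 200
    if status in SPA_ERROR_RESPONSES:
        page, new_status = SPA_ERROR_RESPONSES[status]
        return page.lstrip("/"), new_status
    return key, status


bucket = {"index.html", "static/js/main.js"}
print(serve("/", bucket))                   # ('index.html', 200)
print(serve("/static/js/main.js", bucket))  # ('static/js/main.js', 200)
print(serve("/rooms/42", bucket))           # ('index.html', 200)
```

The trade-off of this mapping is that a genuinely missing file, such as a mistyped script URL, also comes back as index.html with status 200, which can make broken asset links harder to spot.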

AWS CodePipeline

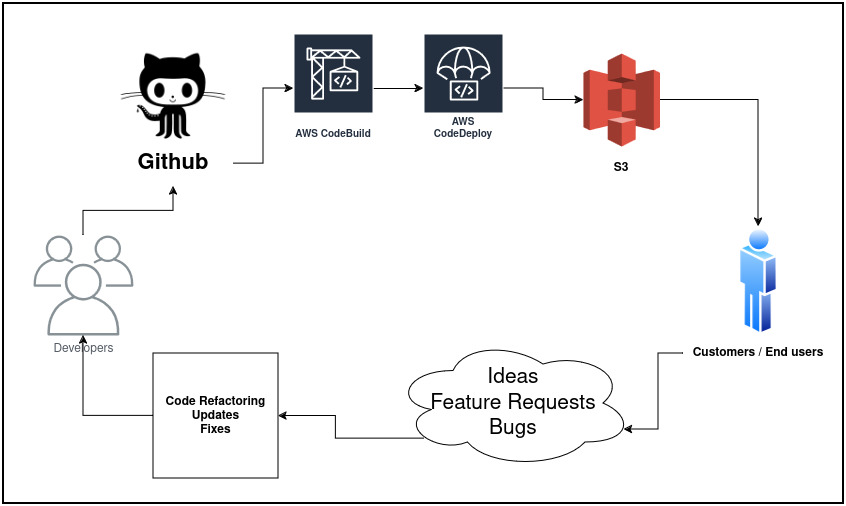

Finally, after ensuring the front-end is communicating with the back-end services, it’s time to create a production-ready pipeline to continuously update our front-end application. I chose to use CodePipeline for the front-end for now, but you can also apply these steps for building containers and pushing them to Amazon Elastic Container Registry.

With a buildspec.yml file in your project’s root directory, you can create a new pipeline in CodePipeline. The pipeline consists of the following stages:

- Source: Connect your GitHub repository. The pipeline starts whenever new commits are pushed to it.

- Build: CodeBuild runs the commands defined in buildspec.yml, including any pre-build and post-build commands. For example, this is where we create the production build folder for the React SPA.

- Deploy: Finally, take the build artifact (the build folder) and use the AWS CLI S3 commands, such as aws s3 sync, to copy its contents to the S3 bucket that hosts the static website.
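The Deploy stage boils down to mirroring the build folder into the bucket, and a single aws s3 sync command handles that. The Python sketch below, using only the standard library, shows what has to be decided for each file: its S3 key, its Content-Type, and how long it may be cached. The function name is hypothetical, and the caching rule assumes a Create React App style build where content-hashed files live under static/; it is a common convention, not something the pipeline requires.

```python
import mimetypes
import tempfile
from pathlib import Path
from typing import Dict, List

# Assumed caching convention: content-hashed files under static/ can be
# cached for a year, while everything else (index.html, manifest.json, ...)
# is revalidated so users pick up new deployments.
LONG_CACHE = "public, max-age=31536000, immutable"
NO_CACHE = "no-cache"


def plan_upload(build_dir: str) -> List[Dict[str, str]]:
    """Describe the S3 key, Content-Type and Cache-Control for each file."""
    root = Path(build_dir)
    plan = []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        key = path.relative_to(root).as_posix()  # S3 keys use '/' on every OS
        content_type, _ = mimetypes.guess_type(path.name)
        plan.append({
            "key": key,
            "content_type": content_type or "application/octet-stream",
            "cache_control": LONG_CACHE if key.startswith("static/") else NO_CACHE,
        })
    return plan


# Demo with a throwaway folder that mimics a tiny production build.
with tempfile.TemporaryDirectory() as build:
    for rel in ["index.html", "manifest.json", "static/css/main.abc123.css"]:
        target = Path(build, rel)
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text("")
    for item in plan_upload(build):
        print(item)
```

With the AWS CLI, the same rules could be applied with two aws s3 sync runs that use the --exclude, --include, and --cache-control options: one for static/ and one for everything else.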

Infrastructure As Code

For the cherry on top, I took the front end a step further and used the Terraform CLI to provision its AWS resources, specifically Amazon CloudFront, AWS Certificate Manager, and Amazon Route 53. You can also store the Terraform configuration on GitHub and run it from a CodeBuild stage in CodePipeline to further automate the infrastructure process.
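Two details commonly trip up a CloudFront, ACM, and Route 53 setup: the certificate attached to a CloudFront distribution must be issued in the us-east-1 (N. Virginia) region, and every alternate domain name on the distribution must be covered by one of the certificate's names. The minimal Python sketch below checks both before running terraform apply. The function names and example.com domains are hypothetical, and AWS will still reject an invalid combination on its own, so this only shortens the feedback loop.

```python
from typing import Iterable, List

# CloudFront only accepts ACM certificates issued in us-east-1.
CLOUDFRONT_CERT_REGION = "us-east-1"


def name_covers(cert_name: str, domain: str) -> bool:
    """True if one certificate name (exact or '*.' wildcard) covers domain."""
    cert_name = cert_name.lower().rstrip(".")
    domain = domain.lower().rstrip(".")
    if cert_name.startswith("*."):
        # A wildcard matches exactly one extra label: *.example.com covers
        # www.example.com, but not example.com or a.b.example.com.
        suffix = cert_name[1:]  # ".example.com"
        head = domain[: -len(suffix)] if domain.endswith(suffix) else ""
        return bool(head) and "." not in head
    return cert_name == domain


def check_distribution(aliases: Iterable[str], cert_names: Iterable[str],
                       cert_region: str) -> List[str]:
    """Return a list of problems; an empty list means the pairing looks valid."""
    problems: List[str] = []
    if cert_region != CLOUDFRONT_CERT_REGION:
        problems.append(f"certificate is in {cert_region}; CloudFront needs {CLOUDFRONT_CERT_REGION}")
    names = list(cert_names)
    for alias in aliases:
        if not any(name_covers(n, alias) for n in names):
            problems.append(f"alias {alias} is not covered by the certificate")
    return problems


print(check_distribution(
    aliases=["example.com", "www.example.com"],
    cert_names=["example.com", "*.example.com"],
    cert_region="us-east-1",
))  # []
```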

There is plenty of room for improvement, and I plan to adjust, refine, and polish these steps where it makes sense for the business and the organization. These tools and methodologies really start to shine when you and your team manage hundreds of AWS accounts and production environments accessed by hundreds of end users. But that’s for another article.

And here is a quick video of the finished product.